What is the “dying ReLU” problem in neural networks?

16-10-2019

Question

Referring to the Stanford course notes on Convolutional Neural Networks for Visual Recognition, a paragraph says:

"Unfortunately, ReLU units can be fragile during training and can "die". For example, a large gradient flowing through a ReLU neuron could cause the weights to update in such a way that the neuron will never activate on any datapoint again. If this happens, then the gradient flowing through the unit will forever be zero from that point on. That is, the ReLU units can irreversibly die during training since they can get knocked off the data manifold. For example, you may find that as much as 40% of your network can be "dead" (i.e. neurons that never activate across the entire training dataset) if the learning rate is set too high. With a proper setting of the learning rate this is less frequently an issue."

What does the "dying" of neurons mean here?

Could you please provide an intuitive explanation in simpler terms?

Solution

A "dead" ReLU always outputs the same value (zero, as it happens, but that is not important) for any input. This state is probably reached by learning a large negative bias term for its weights.

In turn, that means that it takes no role in discriminating between inputs. For classification, you could visualise this as a decision plane outside of all possible input data.

Once a ReLU ends up in this state, it is unlikely to recover, because the function's gradient is also 0 for negative inputs, so gradient descent learning will not alter the weights. "Leaky" ReLUs with a small positive gradient for negative inputs (say, $y = 0.01x$ when $x < 0$) are one attempt to address this issue and give the neuron a chance to recover.
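The stuck state, and how a leaky slope changes it, can be seen in a minimal sketch. It assumes a single neuron $\text{act}(wx + b)$ fitted to targets $y = x$ with squared error and full-batch gradient descent; the helper names and all numbers are hypothetical choices for illustration, and the leaky slope 0.01 follows the example above.

```python
# Minimal sketch (hypothetical setup): one neuron act(w*x + b), squared error,
# full-batch gradient descent. Names such as train_neuron are illustrative only.

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    # ReLU is not differentiable at 0; this sketch uses 0 there.
    return 1.0 if z > 0 else 0.0

def leaky_relu(z, slope=0.01):
    return z if z > 0 else slope * z

def leaky_relu_grad(z, slope=0.01):
    return 1.0 if z > 0 else slope

def train_neuron(act, act_grad, w, b, data, lr=0.1, steps=200):
    """Gradient descent on the mean of 0.5 * (act(w*x + b) - y)**2."""
    for _ in range(steps):
        gw = gb = 0.0
        for x, y in data:
            z = w * x + b
            delta = (act(z) - y) * act_grad(z)  # dLoss/dz for this example
            gw += delta * x
            gb += delta
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b

# Targets y = x on inputs in [0, 1]. The large negative bias makes w*x + b < 0
# for every training input, so the plain ReLU starts out "dead".
data = [(x / 10, x / 10) for x in range(11)]
w0, b0 = 0.5, -5.0

print(train_neuron(relu, relu_grad, w0, b0, data))              # (0.5, -5.0): never moves
print(train_neuron(leaky_relu, leaky_relu_grad, w0, b0, data))  # w and b drift upwards
```

With the plain ReLU the weights never move, because every input lands on the flat side. With the leaky slope the gradient is small but non-zero, so the weight and bias drift upwards; whether and how fast the neuron becomes active again depends on the learning rate and the number of steps.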

The sigmoid and tanh neurons can suffer from similar problems as their values saturate, but there is always at least a small gradient allowing them to recover in the long term.
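As a rough numerical comparison (the input values are arbitrary), the derivatives of sigmoid and tanh become tiny for strongly negative inputs but never exactly zero, whereas the ReLU derivative is exactly zero there:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    # d/dz sigmoid(z) = sigmoid(z) * (1 - sigmoid(z))
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_grad(z):
    # d/dz tanh(z) = 1 - tanh(z)^2
    return 1.0 - math.tanh(z) ** 2

def relu_grad(z):
    # Exactly zero for every negative input.
    return 1.0 if z > 0 else 0.0

for z in (-2.0, -5.0, -10.0):
    print(f"z={z:6.1f}  sigmoid'={sigmoid_grad(z):.2e}  "
          f"tanh'={tanh_grad(z):.2e}  relu'={relu_grad(z)}")
```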

OTHER TIPS

Let's review what the ReLU (Rectified Linear Unit) looks like:

The input to the rectifier for some input $x_n$ is $$z_n=\sum_{i=0}^k w_i a^n_i$$ for weights $w_i$ and activations $a^n_i$ from the previous layer for that particular input $x_n$. The rectifier neuron function is $\mathrm{ReLU} = \max(0, z_n)$.

Assuming a very simple error measure

$$\text{error} = \mathrm{ReLU} - y$$

the rectifier has only 2 possible gradient values for the deltas of the backpropagation algorithm:

$$\frac{\partial\, \text{error}}{\partial z_n} = \delta_n = \left\{ \begin{array}{cl} 1 & z_n \geq 0\\ 0 & z_n < 0 \end{array}\right.$$

(if we use a proper error measure, then the 1 will become something else, but the 0 will stay the same), and so for a certain weight $w_j$:

$$\nabla \text{error} = \frac{\partial\, \text{error}}{\partial w_j}=\frac{\partial\, \text{error}}{\partial z_n} \times \frac{\partial z_n}{\partial w_j} = \delta_n \times a_j^n = \left\{ \begin{array}{cl} a_j^n & z_n \geq 0\\ 0 & z_n < 0 \end{array}\right.$$
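The two cases can be checked on a toy example. This is a minimal sketch with made-up weights and activations; it follows the convention above that $\delta_n = 1$ for $z_n \geq 0$ and uses the simple error measure $\text{error} = \mathrm{ReLU} - y$, whose gradient does not depend on $y$:

```python
def forward(w, a):
    """Pre-activation z_n = sum_i w_i * a_i^n and the rectifier output max(0, z_n)."""
    z = sum(wi * ai for wi, ai in zip(w, a))
    return z, max(0.0, z)

def grad_error_wrt_w(w, a):
    """Gradient of error = ReLU(z_n) - y with respect to each weight w_j.

    Uses the convention delta_n = 1 if z_n >= 0 else 0. The target y is a
    constant, so it drops out of the gradient.
    """
    z, _ = forward(w, a)
    delta = 1.0 if z >= 0 else 0.0
    return [delta * aj for aj in a]

a = [1.0, 2.0, 0.5]  # made-up activations a_i^n from the previous layer

print(grad_error_wrt_w([0.3, 0.1, -0.2], a))   # z_n = 0.4  -> [1.0, 2.0, 0.5]
print(grad_error_wrt_w([-0.3, -0.1, 0.2], a))  # z_n = -0.4 -> [0.0, 0.0, 0.0]
```

On the steep side the gradient for $w_j$ is just $a_j^n$; on the flat side every weight gets exactly zero.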

One question that comes to mind is how ReLU actually works "at all" with a gradient of 0 on the left side. What if, for the input $x_n$, the current weights put the ReLU on the left flat side while it optimally should be on the right side for this particular input? The gradient is 0, so the weight will not be updated, not even a tiny bit. Where is the "learning" in this case?

The essence of the answer lies in the fact that Stochastic Gradient Descent does not consider only a single input $x_n$ but many of them, and the hope is that not all inputs will put the ReLU on the flat side, so the gradient will be non-zero for some inputs (it may be positive or negative, though). If at least one input $x_*$ has our ReLU on the steep side, then the ReLU is still alive, because learning is still going on and the weights of this neuron are still being updated. If all inputs put the ReLU on the flat side, there is no hope that the weights change at all, and the neuron is dead.
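A minimal sketch of this "alive for at least one input" criterion, assuming a neuron with an explicit bias $b$ (a hypothetical addition; the formula above folds it into the weights) and a made-up data set of four 2-D inputs:

```python
def active_fraction(w, b, inputs):
    """Fraction of inputs that put the ReLU on its steep side (z >= 0)."""
    count = 0
    for x in inputs:
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        if z >= 0:
            count += 1
    return count / len(inputs)

def is_dead(w, b, inputs):
    # Dead on this data set: no input yields a non-zero gradient.
    return active_fraction(w, b, inputs) == 0.0

inputs = [(0.2, 0.9), (0.5, 0.1), (0.8, 0.7), (0.3, 0.6)]

print(active_fraction((1.0, -1.0), 0.0, inputs))  # 0.5: alive, still learning
print(is_dead((1.0, 1.0), -3.0, inputs))          # True: z < 0 for every input
```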

A ReLU may be alive and then die because the gradient step for some input batch drives the weights to smaller values, making $z_n < 0$ for all inputs. A large learning rate amplifies this problem.
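The effect of the learning rate can be illustrated with a single neuron $\max(0, wx + b)$, squared error, and one hypothetical mini-batch whose target is unusually low (all numbers are made up). The same gradient keeps the neuron alive with a small learning rate and kills it on the whole training set with a large one; afterwards its gradient is zero for every input:

```python
def relu_grad(z):
    return 1.0 if z >= 0 else 0.0

def batch_gradient(w, b, batch):
    """Gradient of the mean of 0.5 * (max(0, w*x + b) - y)**2 w.r.t. (w, b)."""
    gw = gb = 0.0
    for x, y in batch:
        z = w * x + b
        delta = (max(0.0, z) - y) * relu_grad(z)
        gw += delta * x
        gb += delta
    return gw / len(batch), gb / len(batch)

def alive(w, b, xs):
    # Alive if at least one training input lands on the steep side.
    return any(w * x + b >= 0 for x in xs)

xs = [i / 10 for i in range(11)]  # whole training set, x in [0, 1]
w, b = 1.0, 0.5                   # currently alive: w*x + b > 0 for every x
batch = [(1.0, -3.0)]             # hypothetical batch with an unusually low target

gw, gb = batch_gradient(w, b, batch)  # z = 1.5, delta = 4.5 -> (4.5, 4.5)
for lr in (0.01, 1.0):
    w_new, b_new = w - lr * gw, b - lr * gb
    print(lr, "alive" if alive(w_new, b_new, xs) else "dead")  # 0.01 alive, 1.0 dead

# After the large step (w, b) = (-3.5, -4.0): zero gradient on every training input.
print(batch_gradient(-3.5, -4.0, [(x, x) for x in xs]))  # (0.0, 0.0)
```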

As @Neil Slater mentioned, a fix is to modify the flat side to have a small gradient, so that the function becomes $\max(0.1x, x)$, which is called LeakyReLU.

ReLU neurons output zero and have zero derivatives for all negative inputs. So, if the weights in your network always lead to negative inputs into a ReLU neuron, that neuron is effectively not contributing to the network's training. Mathematically, the gradient contribution to the weight updates coming from that neuron is always zero (see the Mathematical Appendix for some details).

What are the chances that your weights will end up producing negative numbers for all inputs into a given neuron? It's hard to answer this in general, but one way in which this can happen is when you make too large an update to the weights. Recall that neural networks are typically trained by minimizing a loss function $L(W)$ with respect to the weights using gradient descent. That is, the weights of a neural network are the "variables" of the function $L$ (the loss depends on the dataset, but only implicitly: it is typically the sum over each training example, and each example is effectively a constant). Since the gradient of a differentiable function points in the direction of steepest increase, all we have to do is calculate the gradient of $L$ with respect to the weights $W$, move a little bit in the opposite direction, then rinse and repeat. That way, we end up at a (local) minimum of $L$. Therefore, if your inputs are on roughly the same scale, a single large step can leave you with weights that give all of them similar pre-activations, and these can all end up being negative.

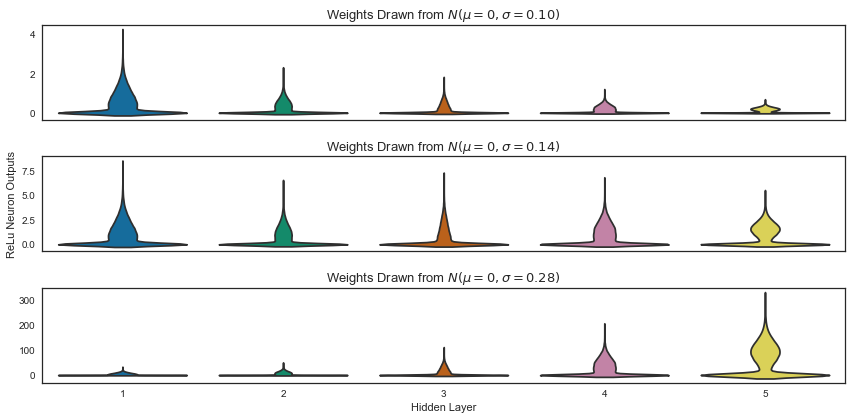

In general, what happens depends on how information flows through the network. You can imagine that as training goes on, the values neurons produce can drift around and make it possible for the weights to kill all data flow through some of them. (Sometimes, though, they may leave these unfavorable configurations due to weight updates earlier in the network!) I explored this idea in a blog post about weight initialization (which can also contribute to this problem) and its relation to data flow. I think my point here can be illustrated by a plot from that article:

The plot displays activations in a 5-layer Multi-Layer Perceptron with ReLU activations after one pass through the network with different initialization strategies. You can see that, depending on the weight configuration, the outputs of your network can be choked off.
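The experiment behind that plot is not reproduced here, but a rough sketch of the same idea in plain Python tracks the mean absolute activation after each layer of a 5-layer ReLU MLP. All settings are hypothetical: width 32, 50 Gaussian inputs, no biases, and two initialization scales chosen for illustration (a fixed standard deviation of 0.01 and the He-style $\sqrt{2/\text{fan-in}}$):

```python
import math
import random

def relu_layer(inputs, weights):
    """Fully connected ReLU layer without bias: out_k = max(0, sum_j x_j * W[j][k])."""
    n_out = len(weights[0])
    return [
        [max(0.0, sum(x[j] * weights[j][k] for j in range(len(x)))) for k in range(n_out)]
        for x in inputs
    ]

def mlp_activation_scales(weight_std, n_layers=5, width=32, n_samples=50, seed=0):
    """Mean |activation| after each layer of a randomly initialized ReLU MLP."""
    rng = random.Random(seed)
    acts = [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(n_samples)]
    scales = []
    for _ in range(n_layers):
        std = weight_std(width)  # the standard deviation may depend on the fan-in
        weights = [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        acts = relu_layer(acts, weights)
        scales.append(sum(abs(v) for row in acts for v in row) / (n_samples * width))
    return scales

small = mlp_activation_scales(lambda fan_in: 0.01)
he = mlp_activation_scales(lambda fan_in: math.sqrt(2.0 / fan_in))
for name, scales in (("std=0.01", small), ("He", he)):
    print(name, ["%.1e" % s for s in scales])
```

With the small fixed scale, the activations shrink by more than an order of magnitude per layer, while the He-style scale keeps them roughly constant; exact numbers depend on the seed.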

Mathematical Appendix

Mathematically, if $L$ is your network's loss function, $x_j^{(i)}$ is the output of the $j$-th neuron in the $i$-th layer, $f(s) = \max(0, s)$ is the ReLU activation, and $s^{(i)}_k = \sum_j w_{jk}^{(i)} x_j^{(i)}$ is the linear input into the $k$-th neuron of the $(i+1)$-st layer, so that $x_k^{(i+1)} = f(s_k^{(i)})$, then by the chain rule the derivative of the loss with respect to a weight connecting the $i$-th and $(i+1)$-st layers is

$$ \frac{\partial L}{\partial w_{jk}^{(i)}} = \frac{\partial L}{\partial x_k^{(i+1)}} \frac{\partial x_k^{(i+1)}}{\partial w_{jk}^{(i)}}\,. $$

The first term on the right can be computed recursively. The second term on the right is the only place directly involving the weight $w_{jk}^{(i)}$ and can be broken down into

$$ \begin{align*} \frac{\partial{x_k^{(i+1)}}}{\partial w_{jk}^{(i)}} &= \frac{\partial{f(s^{(i)}_k)}}{\partial s_k^{(i)}} \frac{\partial s_k^{(i)}}{\partial w_{jk}^{(i)}} \\ &=f'(s^{(i)}_k)\, x_j^{(i)}. \end{align*} $$

From this you can see that if the neuron's inputs $s_k^{(i)}$ are always negative, then $f'(s_k^{(i)}) = 0$, so the weights leading into the neuron are not updated, and the neuron does not contribute to learning.
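The product "derivative of $f$ at the neuron's linear input, times $x_j$" can be checked numerically on a single neuron by comparing it with a central finite difference. The inputs and weights below are made up, and taking $f'(0) = 0$ is an assumption, since ReLU is not differentiable at 0:

```python
def relu(s):
    return max(0.0, s)

def relu_prime(s):
    return 1.0 if s > 0 else 0.0

def neuron_output(x, w_col):
    """Output f(s) of one neuron, where s = sum_j w_jk * x_j is its linear input."""
    s = sum(wj * xj for wj, xj in zip(w_col, x))
    return s, relu(s)

def analytic_grad(x, w_col):
    # d(output) / d(w_jk) = f'(s) * x_j
    s, _ = neuron_output(x, w_col)
    return [relu_prime(s) * xj for xj in x]

def numeric_grad(x, w_col, eps=1e-6):
    # Central finite difference in each weight w_jk.
    grads = []
    for j in range(len(w_col)):
        plus = list(w_col)
        plus[j] += eps
        minus = list(w_col)
        minus[j] -= eps
        diff = neuron_output(x, plus)[1] - neuron_output(x, minus)[1]
        grads.append(diff / (2 * eps))
    return grads

x = [0.5, 1.0, 2.0]          # made-up outputs of the previous layer
alive_w = [0.4, 0.3, 0.1]    # s = 0.7 > 0
dead_w = [-0.4, -0.3, -0.1]  # s = -0.7 < 0

for w_col in (alive_w, dead_w):
    print(analytic_grad(x, w_col), numeric_grad(x, w_col))
```

For the dead configuration both estimates are exactly zero for every incoming weight.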

To be more specific: while the ReLU is active, its local gradient (which is $1$) multiplies the gradient that flows back through backpropagation, so if that backpropagated gradient is large in magnitude, the resulting gradient with respect to a weight $w_i$ is large as well.

When the learning rate is relatively big, such a large gradient can produce a large negative $w_i$ in a single update. This suppresses any further updates to this neuron, since it is almost impossible for the remaining terms of the weighted sum to add up to a positive number large enough to offset the large negative contribution of that "broken" $w_i$.

The "Dying ReLU" refers to a neuron that outputs 0 for all the data in your training set. This happens when the weighted sum of the inputs to the neuron (sometimes also called its activation) becomes $\leq 0$ for all input patterns, which causes the ReLU to output 0. As the derivative of the ReLU is 0 in this case, no weight updates are made, and the neuron is stuck outputting 0.

Things to note:

- Dying ReLU doesn't mean that the neuron's output will also remain zero at test time. Depending on differences between the training and test distributions, this may or may not be the case.

- A dying ReLU is not necessarily permanently dead. If you add new training data or use a pre-trained model for further training, these neurons might kick back in!

- Technically, a dying ReLU doesn't have to output 0 for ALL training data. It may output non-zero values for some data, but the number of epochs may not be enough to move the weights significantly.
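The first point can be illustrated with a hypothetical neuron $\max(0, wx + b)$ whose weights leave it dead on every training input but which still fires on some inputs from a shifted test distribution (all numbers are made up):

```python
def relu_out(w, b, x):
    return max(0.0, w * x + b)

# Hypothetical neuron: w = 1.0, b = -2.0, so it only fires when x > 2.
w, b = 1.0, -2.0
train_xs = [i / 10 for i in range(11)]  # training inputs in [0, 1]
test_xs = [0.5, 1.5, 2.5, 3.0]          # test inputs from a shifted distribution

print(any(relu_out(w, b, x) > 0 for x in train_xs))  # False: dead on the training set
print([relu_out(w, b, x) for x in test_xs])          # [0.0, 0.0, 0.5, 1.0]
```

If training examples from the shifted range were added, inputs such as 2.5 would produce a non-zero gradient again, which is one way such a neuron can kick back in.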