How to measure performance in a C++ (MFC) application?

-

02-07-2019 - |

Question

What good profilers do you know?

What is a good way to measure and tweak the performance of a C++ MFC application?

Is Analysis of algorithms really neccesary? http://en.wikipedia.org/wiki/Algorithm_analysis

Solution

I strongly recommend AQTime if you are staying on the Windows platform. It comes with a load of profilers, including static code analysis, and works with most important Windows compilers and systems, including Visual C++, .NET, Delphi, Borland C++, Intel C++ and even gcc. And it integrates into Visual Studio, but can also be used standalone. I love it.

OTHER TIPS

If you're (still) using Visual C++ 6.0, I suggest using the built-in profiler. For more recent versions you could try Compuware DevPartner Performance Analysis Community Edition.

For Windows, check out Xperf which ships free with the Windows SDK. It uses sampled profile, has some useful UI, & does not require instrumentation. Quite useful for tracking down performance problems. You can answer questions like:

Who is using the most CPU? Drill down to function name using call stacks. Who is allocating the most memory? Who is doing the most registry queries? Disk writes? etc. You will be quite surprised when you find the bottlenecks, as they are probably not where you expected!

It's been a while since I profiled unmanaged code, but when I did I had good results with Intel's vtune. I'm sure somebody else will tell us if that's been overtaken.

Algorithmic analysis has the potential to improve your performance more profoundly than anything you'll find with a profiler, but only for certain classes of application. If you operate over reasonably large sets of data, algorithmic analysis might find ways to be more efficient in CPU/Memory/both, but if your app is mainly form-fill with a relational database for storage, it might not offer you much.

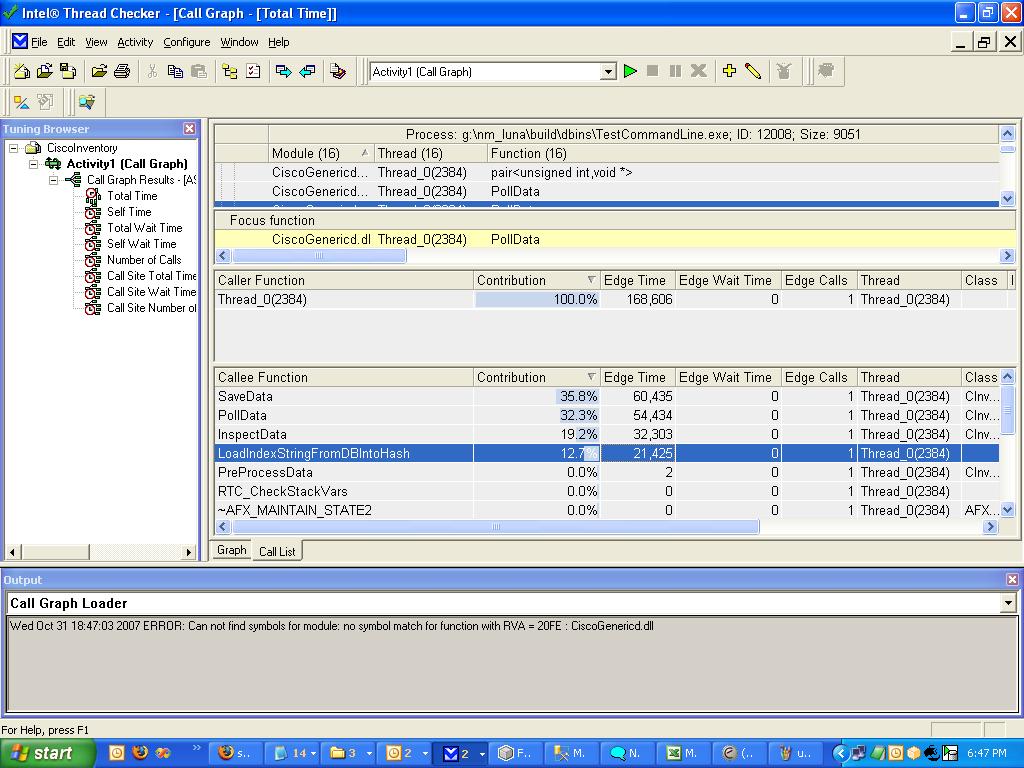

Intel Thread Checker via Vtune performance analyzer- Check this picture for the view i use the most that tells me which function eats up the most of my time.

I can further drill down inside and decompose which functions inside them eats up more time etc. There are different views based on what you are watching (total time = time within fn + children), self time (time spent only in code running inside the function etc).

This tool does a lot more than profiling but i haven't explored them all. I would definitely recommend it. The tool is also available for downloading as a fully functional trial version that can run for 30 days. If you have cost constraints, i would say this window is all that you require to pin point your problem.

Trial download here - https://registrationcenter.intel.com/RegCenter/AutoGen.aspx?ProductID=907&AccountID=&ProgramID=&RequestDt=&rm=EVAL&lang=

ps : I have also played with Rational Rational but for some reason I did not take much to it. I suspect Rational might be more expensive than Intel too.

Tools (like true time from DevPartner) that let you see hit counts for source lines let you quickly find algorithms that have bad 'Big O' complexity. You still have to analyse the algorithm to determine how to reduce the complexity.

I second AQTime, having both AQTime and Compuwares DevPartner, for most cases. The reason being that AQTime will profile any executable that has a valid PDB file, whereas TrueTime requires you to make an instrumented build. This greatly speeds up and simplifies ad hoc profiling. DevPartner is also quite a bit more expensive if this is an issue. Where DevPartner comes into its own is with BoundsChecker, which I still rate as a better tool for catching leaks and overwrites than AQTimes execution profiler. TrueTime can be slighly more accurate than AQTime, but I have never found this to be an issue.

Is profiling worthwhile, IMO yes, if you need performance gains on a local application. I think you also learn a lot about how your program and algorithms really work, and the cost implications of using certain types of object classes for storing and iterating through your data.

Glowcode is a very nice profiler (when it works). It can attach to a running program and requires only symbol files - you don't need to rebuild.

Some versions pf visual studio 2005 (and maybe 2008) actually come with a pretty good performance profiler.

if you have it it should be available under the tools menu

or you can search for a way to open the "performance explorer" window to start a new performance session.

A link to MSDN

FYI, Some versions of Visual Studio only come with a non-optimizing compiler. For one of my my MFC apps if I compile it with MINGW/MSYS ( gcc compiler ) with -o3 then it runs about 5-10x as fast as my release compile with Visual Studio.

For example I have an openstreetmap xml compiler and it takes about 3 minutes ( the gcc compiled version) to process a 2.7GB xml file. My visual studio compile of the same code takes about 18 minutes to run.