I'll try another way to explain it here then. :)

The short answer is: the unit of your Cartesian positions doesn't matter as long as you keep it homogeneous, i.e. as long as you apply the same unit both to your scene and to your camera.

For the longer answer, let's go back to the formula you used:

b_x = (d_x * s_x) / (d_z * r_x) * r_z

b_y = (d_y * s_y) / (d_z * r_y) * r_z

With:

- d: the Cartesian coordinates of the point, relative to the camera
- s: the size of your printable surface
- r: the size of your "sensor" / recording surface (i.e. r_x and r_y the size of the sensor and r_z its focal length)
- b: the position on your printable surface
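Written out in Python, the projection these symbols describe (b_x = (d_x * s_x) / (d_z * r_x) * r_z, and likewise for b_y) could look like the minimal sketch below. The function name `project` and the sample camera (640x480 px output, 36 mm x 24 mm sensor, 50 mm focal length, all lengths in meters) are hypothetical, chosen only for illustration. It also assumes the optical axis goes through the centre of the printable surface, since d_x = 0 gives b_x = 0.

```python
def project(d, s, r):
    """Project a camera-relative point onto the printable surface.

    d: (d_x, d_y, d_z), the point relative to the camera, in some length unit
    s: (s_x, s_y), the size of the printable surface, in pixels
    r: (r_x, r_y, r_z), sensor width, sensor height and focal length,
       in the SAME length unit as d
    Returns (b_x, b_y) in pixels, relative to where the optical axis hits
    the surface (assumed here to be its centre).
    """
    d_x, d_y, d_z = d
    s_x, s_y = s
    r_x, r_y, r_z = r
    if d_z == 0:
        raise ValueError("d_z is 0: the point cannot be projected")
    b_x = (d_x * s_x) / (d_z * r_x) * r_z
    b_y = (d_y * s_y) / (d_z * r_y) * r_z
    return b_x, b_y


# Hypothetical setup, every length expressed in meters.
b_x, b_y = project(d=(0.5, 0.25, 2.0), s=(640, 480), r=(0.036, 0.024, 0.05))
print(b_x, b_y)  # roughly 222.2 and 125.0
```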

Now let's do a (pseudo) dimensional analysis. We have:

[PIXEL] = (([LENGTH] * [PIXEL]) / ([LENGTH] * [LENGTH])) * [LENGTH]

Whatever unit you use for LENGTH, it cancels out (two LENGTH factors above the fraction bar, two below), so only the proportions are kept.

For example, with millimeters:

[PIXEL] = (([MilliMeter] * [PIXEL]) / ([MilliMeter] * [MilliMeter])) * [MilliMeter]

= (([Meter/1000] * [PIXEL]) / ([Meter/1000] * [Meter/1000])) * [Meter/1000]

= ((1/1000 * 1/1000) / (1/1000 * 1/1000)) * (([Meter] * [PIXEL]) / ([Meter] * [Meter])) * [Meter]

= (([Meter] * [PIXEL]) / ([Meter] * [Meter])) * [Meter]
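A quick numerical check of this cancellation, as a sketch in Python: the function name `project_x` and the sample values (640 px output width, 36 mm wide sensor, 50 mm focal length) are illustrative assumptions, not values from your question. The same scene and camera are expressed once in meters and once in millimeters, and the pixel coordinate comes out the same.

```python
def project_x(d_x, d_z, s_x, r_x, r_z):
    """b_x = (d_x * s_x) / (d_z * r_x) * r_z.

    s_x is in pixels; d_x, d_z, r_x and r_z must all share one length unit,
    but which unit that is does not matter.
    """
    return (d_x * s_x) / (d_z * r_x) * r_z


# Same scene and camera, every length in meters...
in_meters = project_x(d_x=0.5, d_z=2.0, s_x=640, r_x=0.036, r_z=0.05)
# ...and every length in millimeters.
in_millimeters = project_x(d_x=500.0, d_z=2000.0, s_x=640, r_x=36.0, r_z=50.0)

print(in_meters, in_millimeters)  # both roughly 222.2 px
```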

Back to my explanations on your other thread:

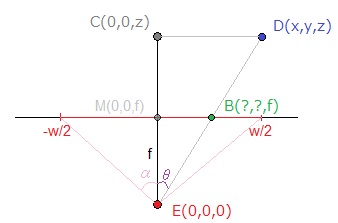

If we use those notations to express b_x:

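b_x = (d_x * s_x) / (d_z * r_x) * r_z

= (d_x * w) / (d_z * 2 * f * tan(α)) * f

= (d_x * w) / (d_z * 2 * tan(α))

where w is the image width in px, f the focal length, and α half of the horizontal field of view (i.e. s_x = w, r_x = 2 * f * tan(α) and r_z = f).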

Whether you use (d_x, d_y, d_z) = (X, Y, Z) or (d_x, d_y, d_z) = (1000*X, 1000*Y, 1000*Z), the ratio d_x / d_z won't change, and in this last form it is the only place where a length unit appears.
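Here is a sketch in Python checking both points: that the focal length f cancels out of the field-of-view form, and that multiplying (d_x, d_z) by 1000 leaves b_x unchanged. The function names, the 800 px width, the 50 mm focal length and the 60° horizontal field of view (so α = 30°) are hypothetical values chosen for illustration; α is passed in radians, since `math.tan` expects radians.

```python
import math


def project_x_fov(d_x, d_z, w, alpha):
    """b_x = (d_x * w) / (d_z * 2 * tan(alpha)).

    w: image width in px; alpha: half of the horizontal field of view, in radians.
    """
    return (d_x * w) / (d_z * 2 * math.tan(alpha))


def project_x_sensor(d_x, d_z, w, f, alpha):
    """General form, with s_x = w, r_x = 2 * f * tan(alpha) and r_z = f."""
    r_x = 2 * f * math.tan(alpha)
    return (d_x * w) / (d_z * r_x) * f


alpha = math.radians(30)  # hypothetical 60 degree horizontal field of view

fov_form = project_x_fov(1.0, 4.0, 800, alpha)
sensor_form = project_x_sensor(1.0, 4.0, 800, f=0.05, alpha=alpha)  # f cancels
scaled_d = project_x_fov(1000.0, 4000.0, 800, alpha)  # same point, d scaled by 1000

print(fov_form, sensor_form, scaled_d)  # all three roughly 173.2 px
```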

As for the cause of your problem, check that you also apply the correct unit to the position of your camera / to its distance to the scene. Also check your α, or the unit of your focal length, depending on which one you use.

I think the latter is the most likely: it is easy to forget to apply the right unit to the characteristics of your camera as well.
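To make the two suspects concrete, here is a sketch in Python of what each unit mismatch does. It assumes, hypothetically, a camera that is only translated (no rotation) and looks down +z, so that d is simply the point minus the camera position; the helper names and all numbers are made up for illustration.

```python
def camera_relative(point, camera):
    """d = point - camera position.

    Hypothetical simplification: the camera is not rotated and looks down +z.
    """
    return tuple(p - c for p, c in zip(point, camera))


def project_x(d, s_x, r_x, r_z):
    """b_x = (d_x * s_x) / (d_z * r_x) * r_z."""
    d_x, _, d_z = d
    return (d_x * s_x) / (d_z * r_x) * r_z


S_X = 640               # image width, in px
R_X, R_Z = 0.036, 0.05  # sensor width and focal length, in meters

point_m, camera_m = (0.5, 0.0, 3.0), (0.0, 0.0, 1.0)
point_mm = tuple(1000 * v for v in point_m)
camera_mm = tuple(1000 * v for v in camera_m)

# Consistent units: everything in meters, or everything in millimeters.
ok_m = project_x(camera_relative(point_m, camera_m), S_X, R_X, R_Z)
ok_mm = project_x(camera_relative(point_mm, camera_mm), S_X, 1000 * R_X, 1000 * R_Z)

# Mistake 1: scene converted to millimeters, camera position left in meters.
bad_position = project_x(camera_relative(point_mm, camera_m), S_X, R_X, R_Z)

# Mistake 2: focal length given in millimeters while the sensor width is in meters.
bad_focal = project_x(camera_relative(point_m, camera_m), S_X, R_X, 50.0)

print(ok_m, ok_mm)   # both roughly 222.2 px
print(bad_position)  # roughly 148.2 px: the ratio d_x / d_z is wrong
print(bad_focal)     # 1000 times too large
```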